Datatask chose to run its platform on the cloud provider Scaleway. Beyond letting us meet strict sovereignty requirements, this provider won us over with the breadth of its components and its ability to provide tooling around its platform. Being able to deploy an infrastructure almost instantly (within a few minutes) and repeatably is a major concern for us. Naturally, this provisioning is done with Terraform.

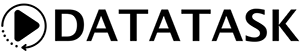

We will see how to deploy a durable and secure infrastructure using a private network on Scaleway with Terraform. This infrastructure is made up of the following components:

- An API
- A PostgreSQL instance
- A VPC
- A Public Gateway
- A Load Balancer
- A Private Network

Before we start, some context

For this exercise, I created a simple API named "team-builder" that lists employees and adds them to a database. Here are its routes:

- GET /employee/list

- POST /employee/add

- GET /health

The components we are going to install will be arranged as follows:

As we can see, the network is sealed off and no connection to the database can be made from outside. The only entry points are the load balancer's IP for HTTP traffic and the public gateway's IP for SSH access to the instance. All communication between components goes through the private network.

Prerequisites

Before you can deploy an infrastructure on Scaleway, make sure you meet the following prerequisites:

Have a Scaleway project

It can be created at the organization level.

Have an API key

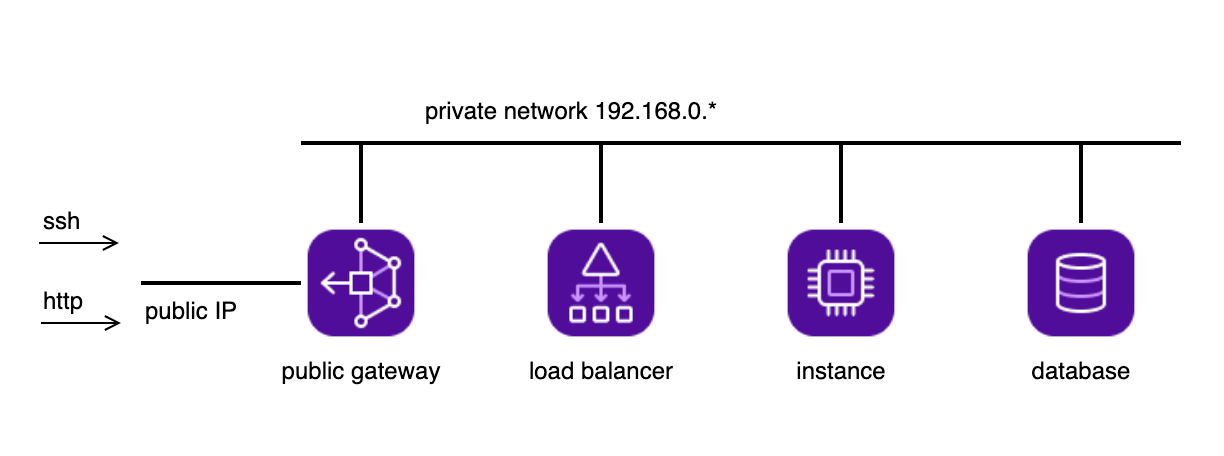

For Terraform to create components in your Scaleway project, you must give it an API key with sufficient permissions.

- To do so, start by creating an application in the IAM section.

iam : application

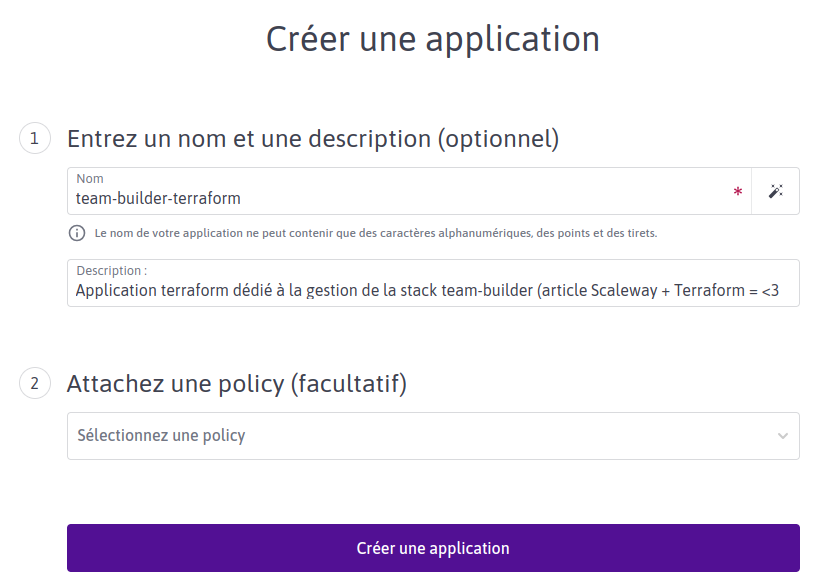

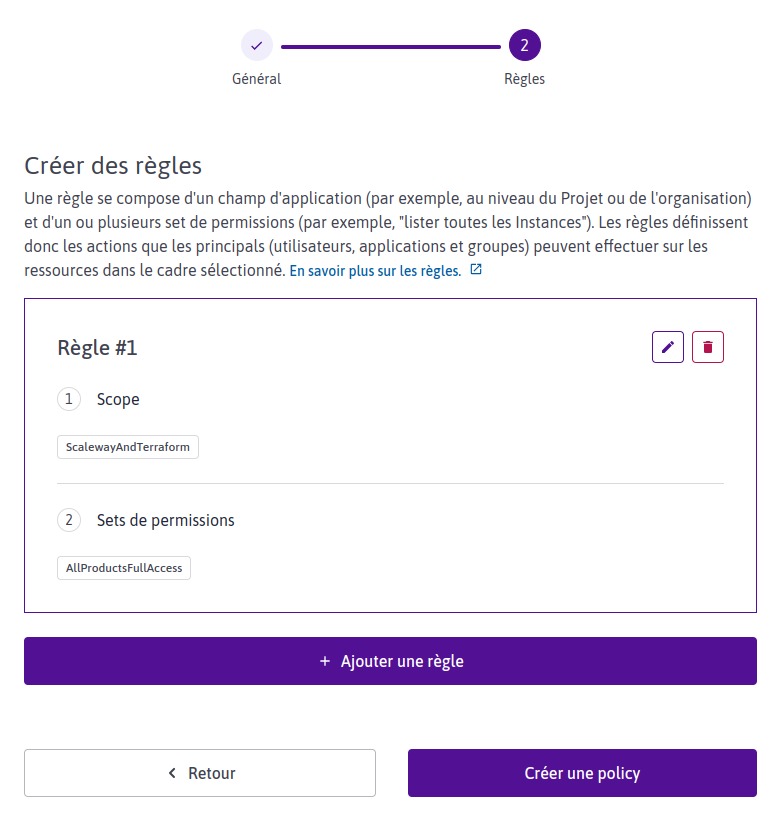

- Then create a policy with the "AllProductsFullAccess" permission on your project.

iam : policy : step 1

iam : policy : step 2

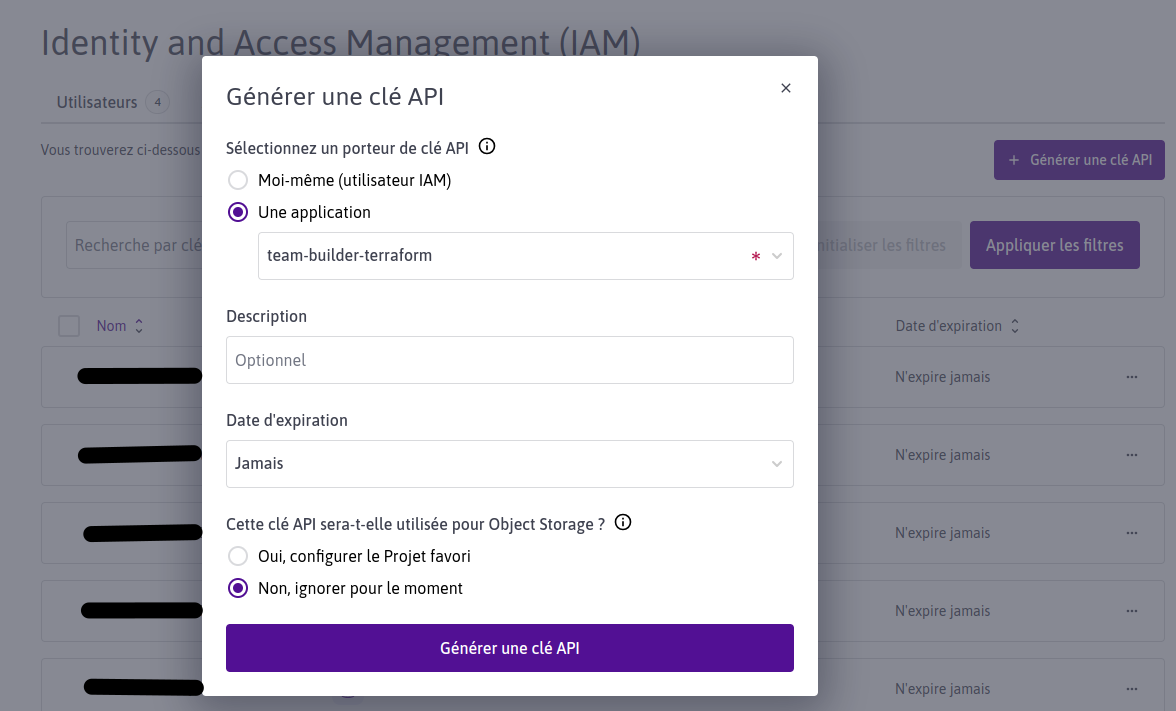

- And generate an API key linked to your application.

iam : api keys

Once the key has been generated, export the following environment variables:

    export TF_VAR_scw_access_key="xx xxxxxxxxxxxxxxxxxx"
    export TF_VAR_scw_secret_key="xx xxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"
    export TF_VAR_scw_organisation="xx xxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"
    export TF_VAR_scw_project="xx xxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"
    export TF_VAR_scw_region="xxxxx"
    export TF_VAR_scw_zone="xxxxx"

These variables will be used later to configure Terraform's Scaleway provider.
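Terraform reads an environment variable named `TF_VAR_<name>` as the value of the input variable `<name>`, so a forgotten export only surfaces later as an interactive prompt or an error. As a minimal sketch (the function name `check_scw_env` is hypothetical and not part of the project), the six variables can be checked before running Terraform:

```shell
# Verify that every TF_VAR_* variable expected by the provider block is set.
# Prints the name of each missing variable and returns 1 if any is missing.
check_scw_env() {
  missing=0
  for name in scw_access_key scw_secret_key scw_organisation \
              scw_project scw_region scw_zone; do
    # Indirect lookup of TF_VAR_<name>, written with eval to stay POSIX-compatible.
    eval "value=\${TF_VAR_${name}:-}"
    if [ -z "$value" ]; then
      echo "missing: TF_VAR_${name}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Calling `check_scw_env && terraform plan ...` would then stop early when an export is missing.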

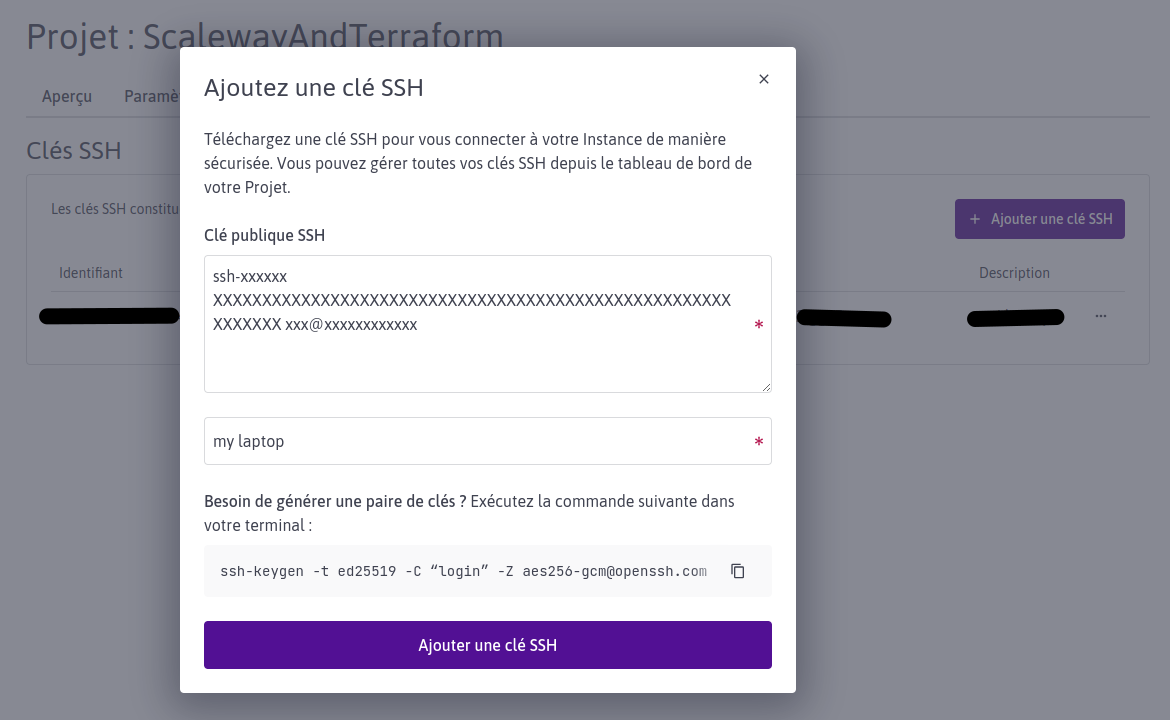

Of course, if you have a Scaleway account with sufficient permissions, you can also create an API key linked directly to your account, but it is better practice to have a dedicated application for Terraform.

Add your public SSH key to the Scaleway project

To connect to the created instances over SSH, we need to add our public key to the project so that it is injected automatically when the instances boot. If you have not created an SSH key yet, here is how to do it: how-to create-ssh-key

Once generated, add the public key to your project.

project : ssh keys
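For readers without a key pair, here is a minimal sketch built on the standard `ssh-keygen` tool. The function name `ensure_ssh_key`, the key comment and the empty passphrase are assumptions made for this illustration; the empty passphrase only keeps the sketch non-interactive, and you may prefer a protected key combined with an SSH agent.

```shell
# Generate an Ed25519 key pair at the given path unless one already exists.
# -N "" sets an empty passphrase (an assumption of this sketch), -q silences output.
ensure_ssh_key() {
  key_path=$1
  if [ -f "$key_path" ]; then
    echo "key already present: $key_path"
    return 0
  fi
  ssh-keygen -t ed25519 -N "" -C "terraform-scaleway" -f "$key_path" -q
}
```

After `ensure_ssh_key "$HOME/.ssh/id_ed25519"`, the content of `$HOME/.ssh/id_ed25519.pub` is the public key to paste into the project.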

Terraform version 1.0 or later

Refer to the official Terraform documentation to install it.

Terraform project

Let's start by creating a directory for our Terraform project:

    mkdir "team-builder-terraform" && cd "$_"

Then create the files for the different components of our infrastructure:

    touch main.tf variables.tf vpc.tf database.tf instance.tf load_balancer.tf

main.tf

    terraform {
      required_version = "~>1.0"
      required_providers {
        scaleway = {
          source = "scaleway/scaleway"
          version = "2.16.3"
        }
      }
    }

    provider "scaleway" {
      access_key = var.scw_access_key
      secret_key = var.scw_secret_key
      organization_id = var.scw_organisation
      project_id = var.scw_project
      zone = var.scw_zone
      region = var.scw_region
    }

variables.tf

    #
    ## scaleway
    #
    variable "scw_access_key" {
      type = string
    }

    variable "scw_secret_key" {
      type = string
    }

    variable "scw_organisation" {
      type = string
    }

    variable "scw_project" {
      type = string
    }

    variable "scw_region" {
      type = string
    }

    variable "scw_zone" {
      type = string
    }

    #
    ## db postgreSQL
    #
    variable "db_instance_node_type" {
      default = "DB-DEV-S"
      type = string
    }

    variable "db_instance_admin_user_name" {
      type = string
    }

    variable "db_instance_admin_password" {
      type = string
    }

    variable "db_instance_volume_size_in_gb" {
      default = 20
      type = number
    }

    variable "db_instance_team_builder_user_name" {
      type = string
    }

    variable "db_instance_team_builder_password" {
      type = string
    }

    #
    ## team-builder instance
    #
    variable "team_builder_instance_type" {
      default = "PLAY2-NANO"
      type = string
    }

    variable "team_builder_instance_root_volume_size_in_gb" {
      default = 10
      type = number
    }

    #
    ## team-builder container
    #
    variable "team_builder_container_image" {
      type = string
    }

    variable "team_builder_container_db_schema" {
      type = string
    }

    variable "team_builder_container_db_employees_table" {
      type = string
    }

    #
    ## load balancer
    #
    variable "my_company_lb_type" {
      default = "LB-S"
      type = string
    }

    #
    ## ssh
    #
    variable "ssh_private_key_path" {
      default = "~/.ssh/id_ed25519"
      type = string
    }

vpc.tf

    resource "scaleway_vpc_private_network" "my_company_pn" {
      name = "my-company-pn"
    }

    resource "scaleway_vpc_public_gateway_dhcp" "my_company_dhcp" {
      subnet = "192.168.0.0/24"
      push_default_route = true
      enable_dynamic = false
      pool_low = "192.168.0.20"
      pool_high = "192.168.0.249"
    }

    resource "scaleway_vpc_public_gateway_ip" "my_company_pg_ip" {}

    resource "scaleway_vpc_public_gateway" "my_company_pg" {
      name = "my-company-pg"
      type = "VPC-GW-S"
      ip_id = scaleway_vpc_public_gateway_ip.my_company_pg_ip.id
    }

    resource "scaleway_vpc_gateway_network" "my_company_gn" {
      gateway_id = scaleway_vpc_public_gateway.my_company_pg.id
      private_network_id = scaleway_vpc_private_network.my_company_pn.id
      dhcp_id = scaleway_vpc_public_gateway_dhcp.my_company_dhcp.id
      cleanup_dhcp = true
      enable_masquerade = true
      depends_on = [
        scaleway_vpc_public_gateway_ip.my_company_pg_ip,
        scaleway_vpc_public_gateway.my_company_pg,
        scaleway_vpc_private_network.my_company_pn
      ]
    }

    resource "scaleway_vpc_public_gateway_dhcp_reservation" "my_company_pg_dhcp_res_team_builder_instance" {
      gateway_network_id = scaleway_vpc_gateway_network.my_company_gn.id
      mac_address = scaleway_instance_private_nic.team_builder_instance_pnic01.mac_address
      ip_address = "192.168.0.10"
      depends_on = [
        scaleway_vpc_public_gateway_dhcp.my_company_dhcp,
        scaleway_vpc_gateway_network.my_company_gn,
        scaleway_instance_private_nic.team_builder_instance_pnic01
      ]
    }

    resource "scaleway_vpc_public_gateway_pat_rule" "my_company_pg_pat_rule_team_builder_instance_ssh" {
      gateway_id = scaleway_vpc_public_gateway.my_company_pg.id
      private_ip = scaleway_vpc_public_gateway_dhcp_reservation.my_company_pg_dhcp_res_team_builder_instance.ip_address
      private_port = 22
      public_port = 2202
      protocol = "tcp"
      depends_on = [
        scaleway_vpc_gateway_network.my_company_gn,
        scaleway_vpc_private_network.my_company_pn
      ]
    }

database.tf

    resource "scaleway_rdb_instance" "my_company_db_instance" {
      name = "database"
      node_type = var.db_instance_node_type
      engine = "PostgreSQL-14"
      is_ha_cluster = false
      user_name = var.db_instance_admin_user_name
      password = var.db_instance_admin_password
      volume_type = "bssd"
      volume_size_in_gb = var.db_instance_volume_size_in_gb
      disable_backup = false
      backup_schedule_frequency = 24 # every day
      backup_schedule_retention = 7 # keep it one week
      private_network {
        ip_net = "192.168.0.254/24" #pool high
        pn_id = scaleway_vpc_private_network.my_company_pn.id
      }
    }

    resource "scaleway_rdb_acl" "my_private_network_acl" {
      instance_id = scaleway_rdb_instance.my_company_db_instance.id
      acl_rules {
        ip = "192.168.0.0/24"
        description = "my_private_network"
      }
    }

    resource "scaleway_rdb_database" "db" {
      instance_id = scaleway_rdb_instance.my_company_db_instance.id
      name = "companies"
    }

    resource "scaleway_rdb_user" "db_team_builder_user" {
      instance_id = scaleway_rdb_instance.my_company_db_instance.id
      name = var.db_instance_team_builder_user_name
      password = var.db_instance_team_builder_password
      is_admin = false
    }

    resource "scaleway_rdb_privilege" "db_admin_user_privilege" {
      instance_id = scaleway_rdb_instance.my_company_db_instance.id
      user_name = var.db_instance_admin_user_name
      database_name = scaleway_rdb_database.db.name
      permission = "all"
      depends_on = [
        scaleway_rdb_instance.my_company_db_instance,
        scaleway_rdb_database.db
      ]
    }

    resource "scaleway_rdb_privilege" "db_team_builder_user_privilege" {
      instance_id = scaleway_rdb_instance.my_company_db_instance.id
      user_name = var.db_instance_team_builder_user_name
      database_name = scaleway_rdb_database.db.name
      permission = "all"
      depends_on = [
        scaleway_rdb_instance.my_company_db_instance,
        scaleway_rdb_database.db,
        scaleway_rdb_user.db_team_builder_user
      ]
    }

instance.tf

    resource "scaleway_instance_security_group" "my_company_security_group" {
      name = "my-company-sg"
      inbound_default_policy = "drop"
      external_rules = true
    }

    resource "scaleway_instance_server" "team_builder_instance" {
      name = "team-builder"
      type = var.team_builder_instance_type
      zone = var.scw_zone
      image = "ubuntu_focal"
      security_group_id = scaleway_instance_security_group.my_company_security_group.id
      tags = ["team-builder"]
      root_volume {
        size_in_gb = var.team_builder_instance_root_volume_size_in_gb
        delete_on_termination = true
      }
    }

    resource "scaleway_instance_private_nic" "team_builder_instance_pnic01" {
      server_id = scaleway_instance_server.team_builder_instance.id
      private_network_id = scaleway_vpc_private_network.my_company_pn.id
      depends_on = [
        scaleway_instance_server.team_builder_instance
      ]
    }

    resource "time_sleep" "wait_30_seconds_after_team_builder_instance_network_setup" {
      create_duration = "30s"
      depends_on = [
        scaleway_instance_server.team_builder_instance,
        scaleway_lb.my_company_lb,
        scaleway_vpc_public_gateway.my_company_pg,
        scaleway_vpc_public_gateway_dhcp_reservation.my_company_pg_dhcp_res_team_builder_instance,
        scaleway_vpc_public_gateway_pat_rule.my_company_pg_pat_rule_team_builder_instance_ssh
      ]
    }

    resource "null_resource" "reboot_team_builder_instance_after_network_setup" {
      triggers = {
        my_company_pg_dhcp_res_team_builder_instance_id = scaleway_vpc_public_gateway_dhcp_reservation.my_company_pg_dhcp_res_team_builder_instance.id
      }
      provisioner "local-exec" {
        command = <<EOF
    curl -X POST \
      -H "X-Auth-Token: ${var.scw_secret_key}" \
      -H "Content-Type: application/json" \
      -d '{"action": "reboot"}' \
      https://api.scaleway.com/instance/v1/zones/${var.scw_zone}/servers/${split("/", scaleway_instance_server.team_builder_instance.id)[1]}/action
    EOF
      }
      depends_on = [
        time_sleep.wait_30_seconds_after_team_builder_instance_network_setup
      ]
    }

    resource "time_sleep" "wait_30_seconds_after_team_builder_instance_reboot" {
      create_duration = "30s"
      depends_on = [
        null_resource.reboot_team_builder_instance_after_network_setup
      ]
    }

    resource "null_resource" "team_builder_install" {
      connection {
        type = "ssh"
        host = scaleway_vpc_public_gateway_ip.my_company_pg_ip.address
        port = 2202
        user = "root"
        private_key = file(var.ssh_private_key_path)
      }
      provisioner "file" {
        source = "./scripts/install-docker.sh"
        destination = "/tmp/install-docker.sh"
      }
      provisioner "remote-exec" {
        inline = [
          "chmod +x /tmp/install-docker.sh",
          "/tmp/install-docker.sh"
        ]
      }
      depends_on = [
        time_sleep.wait_30_seconds_after_team_builder_instance_reboot
      ]
    }

    resource "null_resource" "team_builder_run" {
      triggers = {
        image = var.team_builder_container_image
        db_host = scaleway_rdb_instance.my_company_db_instance.private_network.0.ip
        db_port = scaleway_rdb_instance.my_company_db_instance.private_network.0.port
        db_user = var.db_instance_team_builder_user_name
        db_password = var.db_instance_team_builder_password
        db_name = scaleway_rdb_database.db.name
        db_schema = var.team_builder_container_db_schema
        db_employees_table = var.team_builder_container_db_employees_table
      }
      connection {
        type = "ssh"
        host = scaleway_vpc_public_gateway_ip.my_company_pg_ip.address
        port = 2202
        user = "root"
        private_key = file(var.ssh_private_key_path)
      }
      provisioner "remote-exec" {
        inline = [
          "mkdir -p /opt/team-builder"
        ]
      }
      provisioner "file" {
        content = templatefile("templates/team-builder/docker-compose.yml", {
          image = var.team_builder_container_image
        })
        destination = "/opt/team-builder/docker-compose.yml"
      }
      provisioner "file" {
        content = templatefile("templates/team-builder/team-builder.env", {
          db_host = scaleway_rdb_instance.my_company_db_instance.private_network.0.ip
          db_port = scaleway_rdb_instance.my_company_db_instance.private_network.0.port
          db_user = var.db_instance_team_builder_user_name
          db_password = var.db_instance_team_builder_password
          db_name = scaleway_rdb_database.db.name
          db_schema = var.team_builder_container_db_schema
          db_employees_table = var.team_builder_container_db_employees_table
        })
        destination = "/opt/team-builder/team-builder.env"
      }
      provisioner "remote-exec" {
        inline = [
          "docker compose -f /opt/team-builder/docker-compose.yml up -d"
        ]
      }
      depends_on = [
        null_resource.team_builder_install
      ]
    }
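The reboot step in `instance.tf` calls the Instance API with the server's bare UUID, which it obtains by splitting the Terraform resource ID on `/`. The sketch below reproduces that transformation in shell, assuming IDs of the form `<zone>/<uuid>` as that `split` call implies; the helper names `bare_id` and `server_action_url` are hypothetical.

```shell
# Strip the zone prefix from a zoned ID such as "fr-par-1/<uuid>".
bare_id() {
  printf '%s\n' "${1#*/}"
}

# Build the server action endpoint used by the reboot provisioner.
# Arguments: zone, zoned server ID.
server_action_url() {
  printf 'https://api.scaleway.com/instance/v1/zones/%s/servers/%s/action\n' \
    "$1" "$(bare_id "$2")"
}
```

This can help when replaying the reboot call by hand while debugging the provisioning sequence.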

load_balancer.tf

    resource "scaleway_lb_ip" "my_company_lb_ip" {}

    resource "scaleway_lb" "my_company_lb" {
      name = "load-balancer"
      ip_id = scaleway_lb_ip.my_company_lb_ip.id
      zone = var.scw_zone
      type = var.my_company_lb_type
      private_network {
        private_network_id = scaleway_vpc_private_network.my_company_pn.id
        dhcp_config = true
      }
      depends_on = [
        scaleway_vpc_public_gateway.my_company_pg
      ]
    }

    resource "scaleway_lb_backend" "my_company_lb_backend" {
      lb_id = scaleway_lb.my_company_lb.id
      name = "team-builder-backend"
      forward_protocol = "http"
      forward_port = "8080"
      server_ips = [scaleway_vpc_public_gateway_dhcp_reservation.my_company_pg_dhcp_res_team_builder_instance.ip_address]
      health_check_http {
        uri = "http://${scaleway_lb_ip.my_company_lb_ip.ip_address}/health"
        method = "GET"
        code = 200
      }
      depends_on = [
        scaleway_instance_server.team_builder_instance
      ]
    }

    resource "scaleway_lb_frontend" "my_company_lb_frontend" {
      lb_id = scaleway_lb.my_company_lb.id
      backend_id = scaleway_lb_backend.my_company_lb_backend.id
      name = "team-builder-frontend"
      inbound_port = "80"
    }

Scripts

Create the script that installs Docker on the Scaleway instances.

    mkdir -p scripts && touch scripts/install-docker.sh

scripts/install-docker.sh

    #!/bin/bash
    apt-get update -y
    apt-get install ca-certificates curl gnupg -y
    install -m 0755 -d /etc/apt/keyrings
    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /etc/apt/keyrings/docker.gpg --yes
    chmod a+r /etc/apt/keyrings/docker.gpg
    echo \
      "deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
      "$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | \
      tee /etc/apt/sources.list.d/docker.list > /dev/null
    apt-get update -y
    apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y

Templates

Create the `docker-compose.yml` file and its environment file, which will be uploaded to the instance to run the API.

    mkdir -p templates/team-builder \
      && touch templates/team-builder/docker-compose.yml templates/team-builder/team-builder.env

templates/team-builder/docker-compose.yml

    version: "3.9"
    services:
      team-builder:
        image: ${image}
        container_name: team-builder
        ports:
          - "8080:8080"
        restart: always
        env_file:
          - team-builder.env

templates/team-builder/team-builder.env

    PORT='8080'
    GIN_MODE='release'
    DB_HOST='${db_host}'
    DB_PORT='${db_port}'
    DB_USER='${db_user}'
    DB_PASSWORD='${db_password}'
    DB_NAME='${db_name}'
    DB_SCHEMA='${db_schema}'
    DB_EMPLOYEES_TABLE='${db_employees_table}'

tfvars

Create the `my_company.tfvars` file containing the variables that will be injected when the Terraform project runs.

    mkdir -p tfvars && touch tfvars/my_company.tfvars

tfvars/my_company.tfvars

    # db postgreSQL instance
    db_instance_node_type = "DB-DEV-S"
    db_instance_admin_user_name = "h4pHTj2d33F2JgDdC2"
    db_instance_admin_password = "7s;E6W%9k3rDy}/2P8ZFUt25j@g^:v-Y"
    db_instance_volume_size_in_gb = 20
    db_instance_team_builder_user_name = "iB55K85pVa82AquSTz"
    db_instance_team_builder_password = "3e9b4/[G74qN%-S6Nu;Qcvw6AbQ!X?^6"

    # load-balancer
    my_company_lb_type = "LB-S"

    # team-builder instance
    team_builder_instance_type = "PLAY2-NANO"
    team_builder_instance_root_volume_size_in_gb = 20

    # team-builder container
    team_builder_container_image = "vhe74/blog-team-builder:20230915"
    team_builder_container_db_schema = "my_company"
    team_builder_container_db_employees_table = "employees"

    # ssh
    ssh_private_key_path = "~/.ssh/id_ed25519"

**Important**

Make sure the `ssh_private_key_path` variable contains the correct path to the private key matching the public key you added to the Scaleway project. Terraform uses this private key to connect to the instance, then to copy and install the files the "team-builder" API needs to run.
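A wrong key path only shows up several minutes into the apply, when the SSH provisioners start. As a minimal sketch (the function name `check_ssh_key` is hypothetical, and it assumes the public key sits next to the private key with a `.pub` suffix, which is `ssh-keygen`'s default layout), the path can be checked beforehand:

```shell
# Check that the private key file and its .pub counterpart both exist.
# Returns 1 and explains which file is missing otherwise.
check_ssh_key() {
  key_path=$1
  if [ ! -f "$key_path" ]; then
    echo "private key not found: $key_path" >&2
    return 1
  fi
  if [ ! -f "${key_path}.pub" ]; then
    echo "public key not found: ${key_path}.pub" >&2
    return 1
  fi
  return 0
}
```

Pass an expanded path such as `"$HOME/.ssh/id_ed25519"`, since a quoted `~` is not expanded by the shell.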

Execution

    terraform init
    terraform plan --var-file ./tfvars/my_company.tfvars

If you exported your variables correctly as described in the prerequisites, here is the plan Terraform should produce.

terraform plan :

    Terraform used the selected providers to generate the following execution
    plan. Resource actions are indicated with the following symbols:
      + create

    Terraform will perform the following actions:

      # null_resource.reboot_team_builder_instance_after_network_setup will be created
      + resource "null_resource" "reboot_team_builder_instance_after_network_setup" {
          + id = (known after apply)
          + triggers = {}
        }

      # null_resource.team_builder_install will be created
      + resource "null_resource" "team_builder_install" {
          + id = (known after apply)
        }

      # null_resource.team_builder_run will be created
      + resource "null_resource" "team_builder_run" {
          + id = (known after apply)
          + triggers = {
              + "db_employees_table" = "employees"
              + "db_name" = "companies"
              + "db_password" = "3e9b4/[G74qN%-S6Nu;Qcvw6AbQ!X?^6"
              + "db_schema" = "my_company"
              + "db_user" = "iB55K85pVa82AquSTz"
              + "image" = "vhe74/blog-team-builder:20230915"
            }
        }

      # scaleway_instance_private_nic.team_builder_instance_pnic01 will be created
      + resource "scaleway_instance_private_nic" "team_builder_instance_pnic01" {
          + id = (known after apply)
          + mac_address = (known after apply)
          + private_network_id = (known after apply)
          + server_id = (known after apply)
          + zone = (known after apply)
        }

      # scaleway_instance_security_group.my_company_security_group will be created
      + resource "scaleway_instance_security_group" "my_company_security_group" {
          + enable_default_security = true
          + external_rules = true
          + id = (known after apply)
          + inbound_default_policy = "drop"
          + name = "my-company-sg"
          + organization_id = (known after apply)
          + outbound_default_policy = "accept"
          + project_id = (known after apply)
          + stateful = true
          + zone = (known after apply)
        }

      # scaleway_instance_server.team_builder_instance will be created
      + resource "scaleway_instance_server" "team_builder_instance" {
          + boot_type = "local"
          + bootscript_id = (known after apply)
          + cloud_init = (known after apply)
          + enable_dynamic_ip = false
          + enable_ipv6 = false
          + id = (known after apply)
          + image = "ubuntu_focal"
          + ipv6_address = (known after apply)
          + ipv6_gateway = (known after apply)
          + ipv6_prefix_length = (known after apply)
          + name = "team-builder"
          + organization_id = (known after apply)
          + placement_group_policy_respected = (known after apply)
          + private_ip = (known after apply)
          + project_id = (known after apply)
          + public_ip = (known after apply)
          + security_group_id = (known after apply)
          + state = "started"
          + tags = [
              + "team-builder",
            ]
          + type = "PLAY2-NANO"
          + user_data = (known after apply)
          + zone = "fr-par-1"
          + root_volume {
              + boot = false
              + delete_on_termination = true
              + name = (known after apply)
              + size_in_gb = 20
              + volume_id = (known after apply)
              + volume_type = (known after apply)
            }
        }

      # scaleway_lb.my_company_lb will be created
      + resource "scaleway_lb" "my_company_lb" {
          + id = (known after apply)
          + ip_address = (known after apply)
          + ip_id = (known after apply)
          + name = "load-balancer"
          + organization_id = (known after apply)
          + project_id = (known after apply)
          + region = (known after apply)
          + ssl_compatibility_level = "ssl_compatibility_level_intermediate"
          + type = "LB-S"
          + zone = "fr-par-1"
          + private_network {
              + dhcp_config = true
              + private_network_id = (known after apply)
              + status = (known after apply)
              + zone = (known after apply)
            }
        }

      # scaleway_lb_backend.my_company_lb_backend will be created
      + resource "scaleway_lb_backend" "my_company_lb_backend" {
          + forward_port = 8080
          + forward_port_algorithm = "roundrobin"
          + forward_protocol = "http"
          + health_check_delay = "60s"
          + health_check_max_retries = 2
          + health_check_port = (known after apply)
          + health_check_timeout = "30s"
          + id = (known after apply)
          + ignore_ssl_server_verify = false
          + lb_id = (known after apply)
          + name = "team-builder-backend"
          + on_marked_down_action = "none"
          + proxy_protocol = "none"
          + send_proxy_v2 = false
          + server_ips = [
              + "192.168.0.10",
            ]
          + ssl_bridging = false
          + sticky_sessions = "none"
          + health_check_http {
              + code = 200
              + method = "GET"
              + uri = (known after apply)
            }
        }

      # scaleway_lb_frontend.my_company_lb_frontend will be created
      + resource "scaleway_lb_frontend" "my_company_lb_frontend" {
          + backend_id = (known after apply)
          + certificate_id = (known after apply)
          + enable_http3 = false
          + id = (known after apply)
          + inbound_port = 80
          + lb_id = (known after apply)
          + name = "team-builder-frontend"
        }

      # scaleway_lb_ip.my_company_lb_ip will be created
      + resource "scaleway_lb_ip" "my_company_lb_ip" {
          + id = (known after apply)
          + ip_address = (known after apply)
          + lb_id = (known after apply)
          + organization_id = (known after apply)
          + project_id = (known after apply)
          + region = (known after apply)
          + reverse = (known after apply)
          + zone = (known after apply)
        }

      # scaleway_rdb_acl.my_private_network_acl will be created
      + resource "scaleway_rdb_acl" "my_private_network_acl" {
          + id = (known after apply)
          + instance_id = (known after apply)
          + region = (known after apply)
          + acl_rules {
              + description = "my_private_network"
              + ip = "192.168.0.0/24"
            }
        }

      # scaleway_rdb_database.db will be created
      + resource "scaleway_rdb_database" "db" {
          + id = (known after apply)
          + instance_id = (known after apply)
          + managed = (known after apply)
          + name = "companies"
          + owner = (known after apply)
          + region = (known after apply)
          + size = (known after apply)
        }

      # scaleway_rdb_instance.my_company_db_instance will be created
      + resource "scaleway_rdb_instance" "my_company_db_instance" {
          + backup_same_region = (known after apply)
          + backup_schedule_frequency = 24
          + backup_schedule_retention = 7
          + certificate = (known after apply)
          + disable_backup = false
          + endpoint_ip = (known after apply)
          + endpoint_port = (known after apply)
          + engine = "PostgreSQL-14"
          + id = (known after apply)
          + is_ha_cluster = false
          + load_balancer = (known after apply)
          + name = "database"
          + node_type = "DB-DEV-S"
          + organization_id = (known after apply)
          + password = (sensitive value)
          + project_id = (known after apply)
          + read_replicas = (known after apply)
          + region = (known after apply)
          + settings = (known after apply)
          + user_name = "h4pHTj2d33F2JgDdC2"
          + volume_size_in_gb = 20
          + volume_type = "bssd"
          + private_network {
              + endpoint_id = (known after apply)
              + hostname = (known after apply)
              + ip = (known after apply)
              + ip_net = "192.168.0.254/24"
              + name = (known after apply)
              + pn_id = (known after apply)
              + port = (known after apply)
              + zone = (known after apply)
            }
        }

      # scaleway_rdb_privilege.db_admin_user_privilege will be created
      + resource "scaleway_rdb_privilege" "db_admin_user_privilege" {
          + database_name = "companies"
          + id = (known after apply)
          + instance_id = (known after apply)
          + permission = "all"
          + region = (known after apply)
          + user_name = "h4pHTj2d33F2JgDdC2"
        }

      # scaleway_rdb_privilege.db_team_builder_user_privilege will be created
      + resource "scaleway_rdb_privilege" "db_team_builder_user_privilege" {
          + database_name = "companies"
          + id = (known after apply)
          + instance_id = (known after apply)
          + permission = "all"
          + region = (known after apply)
          + user_name = "iB55K85pVa82AquSTz"
        }

      # scaleway_rdb_user.db_team_builder_user will be created
      + resource "scaleway_rdb_user" "db_team_builder_user" {
          + id = (known after apply)
          + instance_id = (known after apply)
          + is_admin = false
          + name = "iB55K85pVa82AquSTz"
          + password = (sensitive value)
          + region = (known after apply)
        }

      # scaleway_vpc_gateway_network.my_company_gn will be created
      + resource "scaleway_vpc_gateway_network" "my_company_gn" {
          + cleanup_dhcp = true
          + created_at = (known after apply)
          + dhcp_id = (known after apply)
          + enable_dhcp = true
          + enable_masquerade = true
          + gateway_id = (known after apply)
          + id = (known after apply)
          + mac_address = (known after apply)
          + private_network_id = (known after apply)
          + updated_at = (known after apply)
          + zone = (known after apply)
        }

      # scaleway_vpc_private_network.my_company_pn will be created
      + resource "scaleway_vpc_private_network" "my_company_pn" {
          + created_at = (known after apply)
          + id = (known after apply)
          + name = "my-company-pn"
          + organization_id = (known after apply)
          + project_id = (known after apply)
          + updated_at = (known after apply)
          + zone = (known after apply)
        }

      # scaleway_vpc_public_gateway.my_company_pg will be created
      + resource "scaleway_vpc_public_gateway" "my_company_pg" {
          + bastion_port = (known after apply)
          + created_at = (known after apply)
          + enable_smtp = (known after apply)
          + id = (known after apply)
          + ip_id = (known after apply)
          + name = "my-company-pg"
          + organization_id = (known after apply)
          + project_id = (known after apply)
          + type = "VPC-GW-S"
          + updated_at = (known after apply)
          + zone = (known after apply)
        }

      # scaleway_vpc_public_gateway_dhcp.my_company_dhcp will be created
      + resource "scaleway_vpc_public_gateway_dhcp" "my_company_dhcp" {
          + address = (known after apply)
          + created_at = (known after apply)
          + dns_local_name = (known after apply)
          + dns_search = (known after apply)
          + dns_servers_override = (known after apply)
          + enable_dynamic = false
          + id = (known after apply)
          + organization_id = (known after apply)
          + pool_high = "192.168.0.249"
          + pool_low = "192.168.0.20"
          + project_id = (known after apply)
          + push_default_route = true
          + push_dns_server = (known after apply)
          + rebind_timer = (known after apply)
          + renew_timer = (known after apply)
          + subnet = "192.168.0.0/24"
          + updated_at = (known after apply)
          + valid_lifetime = (known after apply)
          + zone = (known after apply)
        }

      # scaleway_vpc_public_gateway_dhcp_reservation.my_company_pg_dhcp_res_team_builder_instance will be created
      + resource "scaleway_vpc_public_gateway_dhcp_reservation" "my_company_pg_dhcp_res_team_builder_instance" {
          + created_at = (known after apply)
          + gateway_network_id = (known after apply)
          + hostname = (known after apply)
          + id = (known after apply)
          + ip_address = "192.168.0.10"
          + mac_address = (known after apply)
          + type = (known after apply)
          + updated_at = (known after apply)
          + zone = (known after apply)
        }

      # scaleway_vpc_public_gateway_ip.my_company_pg_ip will be created
      + resource "scaleway_vpc_public_gateway_ip" "my_company_pg_ip" {
          + address = (known after apply)
          + created_at = (known after apply)
          + id = (known after apply)
          + organization_id = (known after apply)
          + project_id = (known after apply)
          + reverse = (known after apply)
          + updated_at = (known after apply)
          + zone = (known after apply)
        }

      # scaleway_vpc_public_gateway_pat_rule.my_company_pg_pat_rule_team_builder_instance_ssh will be created
      + resource "scaleway_vpc_public_gateway_pat_rule" "my_company_pg_pat_rule_team_builder_instance_ssh" {
          + created_at = (known after apply)
          + gateway_id = (known after apply)
          + id = (known after apply)
          + organization_id = (known after apply)
          + private_ip = "192.168.0.10"
          + private_port = 22
          + protocol = "tcp"
          + public_port = 2202
          + updated_at = (known after apply)
          + zone = (known after apply)
        }

      # time_sleep.wait_30_seconds_after_team_builder_instance_network_setup will be created
      + resource "time_sleep" "wait_30_seconds_after_team_builder_instance_network_setup" {
          + create_duration = "30s"
          + id = (known after apply)
        }

      # time_sleep.wait_30_seconds_after_team_builder_instance_reboot will be created
      + resource "time_sleep" "wait_30_seconds_after_team_builder_instance_reboot" {
          + create_duration = "30s"
          + id = (known after apply)
        }

    Plan: 25 to add, 0 to change, 0 to destroy.

    ─────────────────────────────────────────────────────────────────────────────

    Note: You didn't use the -out option to save this plan, so Terraform can't
    guarantee to take exactly these actions if you run "terraform apply" now.

Once you have validated the plan, you can apply it.

    terraform apply --var-file ./tfvars/my_company.tfvars

After a wait of 4 to 5 minutes, all the resources are provisioned.
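The plan output ends with a note that, without `-out`, Terraform cannot guarantee that `apply` performs exactly the planned actions. A minimal sketch of the save-then-apply variant follows; the function name `plan_then_apply`, the default file name `tfplan` and the `TERRAFORM` override are assumptions made for this illustration.

```shell
# Save the plan to a file, then apply exactly that saved plan.
# Arguments: var file, plan file (default "tfplan").
# TERRAFORM can be overridden, for example with "echo", to preview the commands.
plan_then_apply() {
  var_file=$1
  plan_file=${2:-tfplan}
  tf=${TERRAFORM:-terraform}
  "$tf" plan -var-file="$var_file" -out="$plan_file" || return 1
  "$tf" apply "$plan_file"
}
```

It would be called as `plan_then_apply ./tfvars/my_company.tfvars`. Keep in mind that a saved plan file stores variable values, including passwords, so it should be treated as a secret and kept out of version control.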

Les ressources déployées

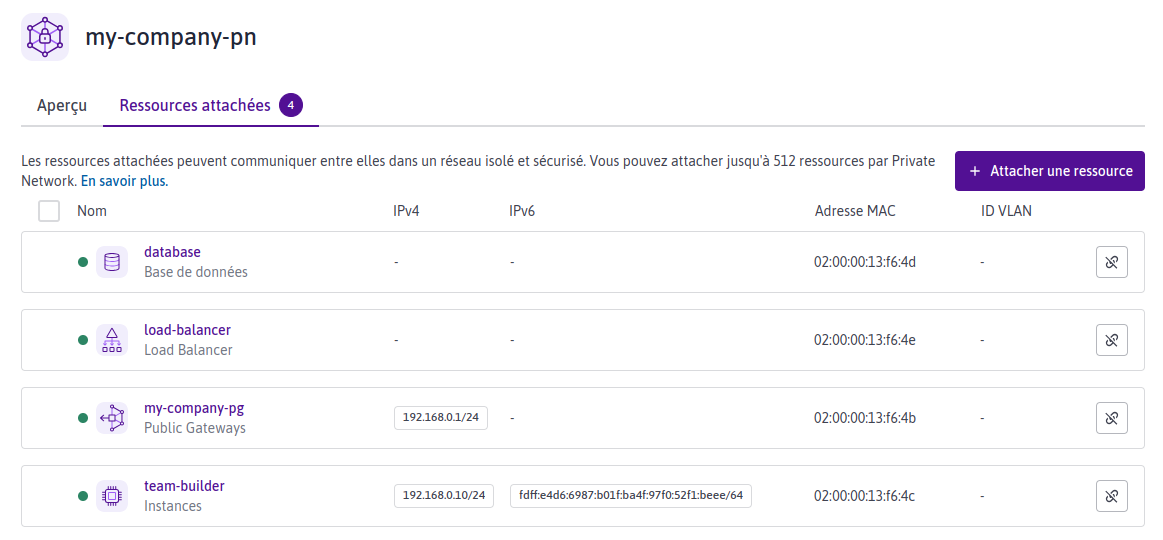

Vous pouvez consulter sur l’interface de Scaleway les ressources qui ont été déployées.Private Network

vpc : private network

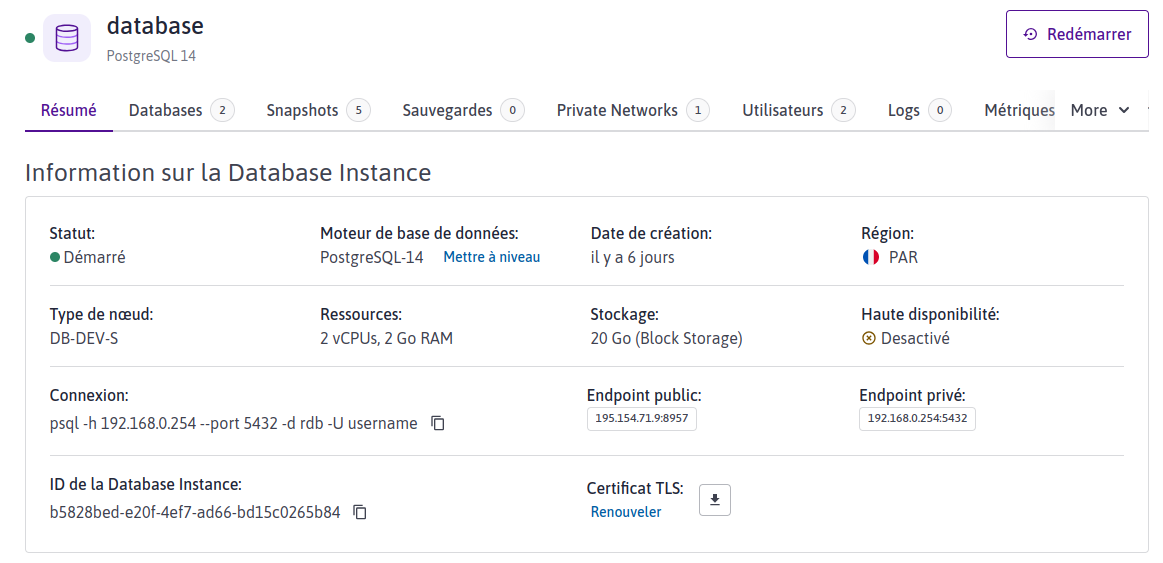

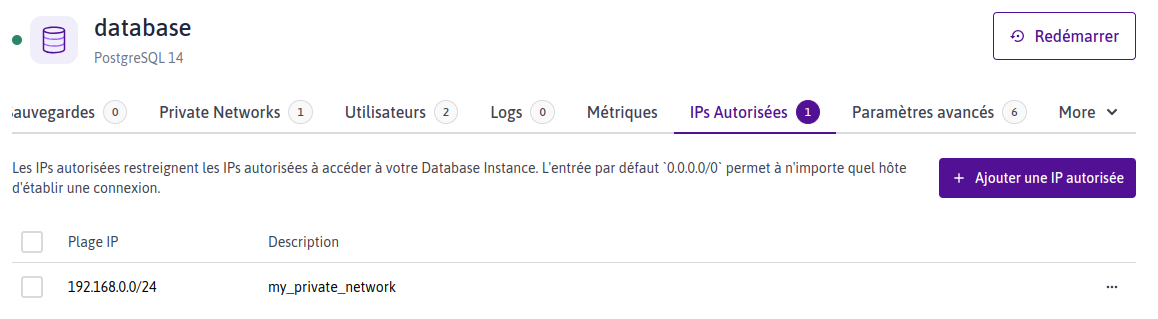

Database

database : postgresql

database : allowed IPs

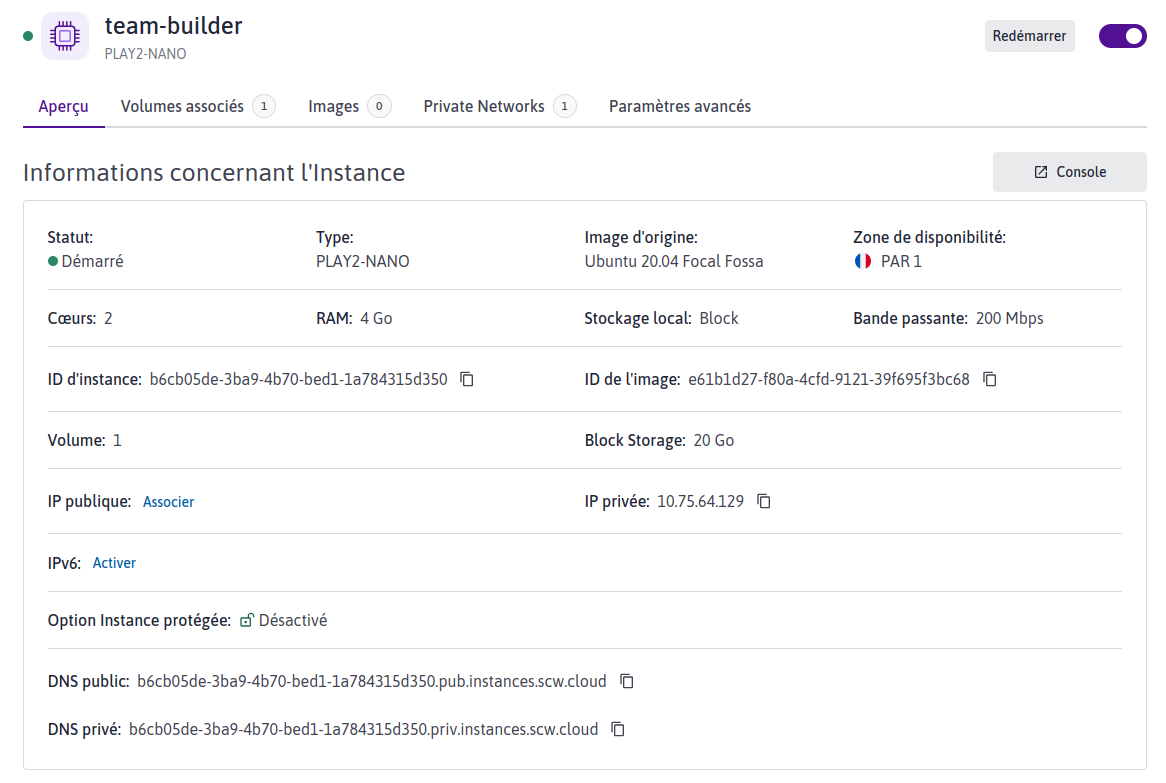

Instance

instance

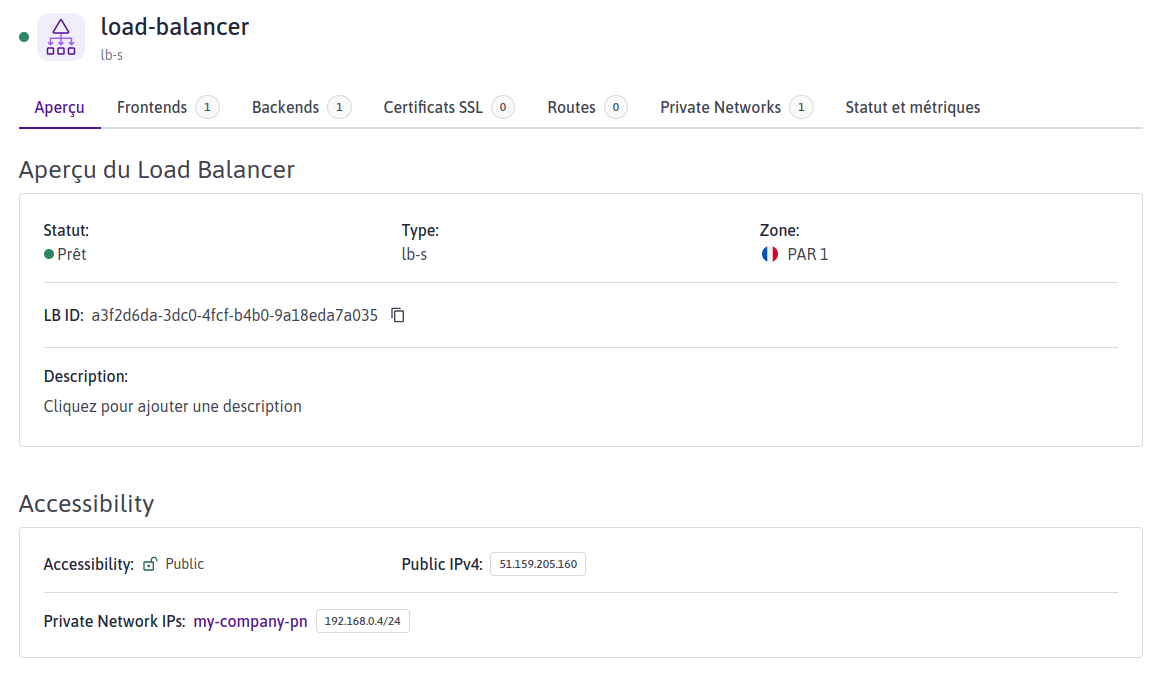

Load Balancer

vpc load balancer

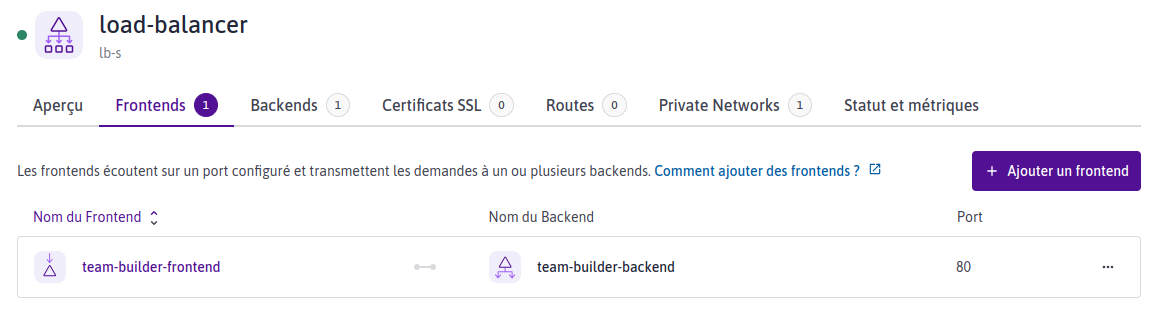

vpc load balancer frontends

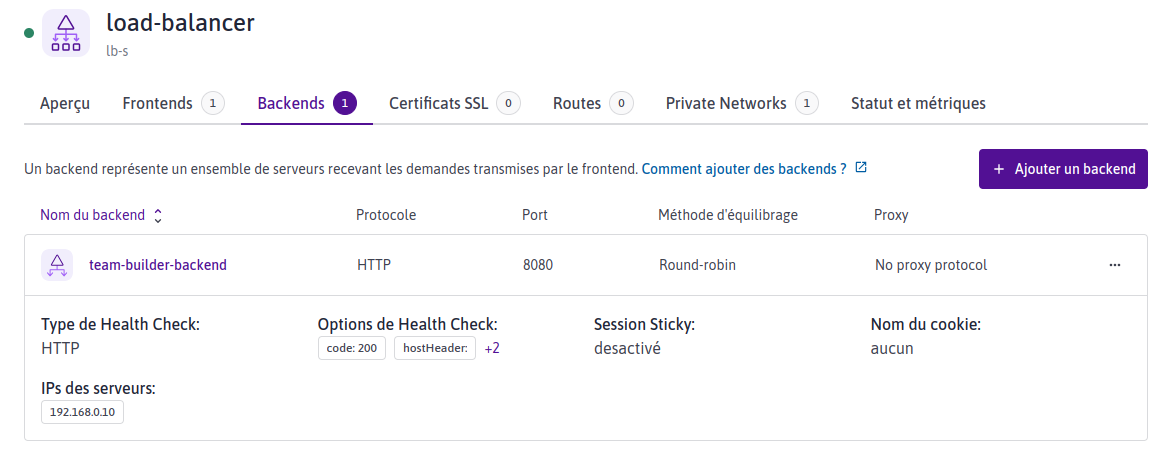

vpc load balancer backends

Using the API requests

You can check that the API works correctly by using the load balancer's public IP.
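Right after an apply, the backend may need a moment before it answers. As a minimal sketch (the function name `wait_for_health` and its default values are hypothetical), the `/health` route can be polled through the load balancer until it returns 200:

```shell
# Poll GET <base_url>/health until it returns HTTP 200 or the attempts run out.
# Arguments: base URL, number of attempts (default 10), delay in seconds (default 5).
wait_for_health() {
  base_url=$1
  attempts=${2:-10}
  delay=${3:-5}
  i=1
  while [ "$i" -le "$attempts" ]; do
    # curl prints only the status code; a connection failure yields "000".
    code=$(curl --silent --output /dev/null --write-out '%{http_code}' \
      --max-time 5 "$base_url/health" || true)
    if [ "$code" = "200" ]; then
      echo "healthy after $i attempt(s)"
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "still unhealthy after $attempts attempt(s)" >&2
  return 1
}
```

For example, `wait_for_health http://51.159.205.160`, with the IP address replaced by that of your own load balancer.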

Here is the command to add an employee to the database:

    curl --location --request POST 'http://51.159.205.160/employee/add' \
      --header 'Content-Type: application/json' \
      --data-raw '{
        "email": "name2@abc.fake",
        "first_name" : "Fake 2",
        "last_name": "Name 2",
        "job_title": "developer"
      }'

    {"employee":{"email":"name2@abc.fake", "first_name":"Fake 2", "last_name":"Name 2", "job_title":"developer"}}

_Replace the public IP address with that of your load balancer._

Here is the request to list the company's employees:

    curl --location --request GET 'http://51.159.205.160/employee/list' \
      --data-raw ''

    {"employees":[
      {"email":"name@abc.fake", "first_name":"Fake", "last_name":"Name", "job_title":"developer"},
      {"email":"name2@abc.fake", "first_name":"Fake 2", "last_name":"Name 2", "job_title":"developer"}
    ]}

_Replace the public IP address with that of your load balancer._

Connecting to the instance over SSH

You can connect to the "team-builder" instance over SSH using the public gateway's IP:

    ssh root@212.47.233.139 -p 2202

_Replace the public IP address with that of your public gateway._

Once connected, you will find the `docker-compose.yml` file and the API's environment file in the `/opt/team-builder/` directory, and you can read the API logs:

    cd /opt/team-builder/
    docker compose logs

    team-builder | [GIN] 2023/09/25 - 12:43:06 | 200 | 62.176µs | 192.168.0.4 | GET "/health"
    team-builder | [GIN] 2023/09/25 - 12:44:06 | 200 | 52.318µs | 192.168.0.4 | GET "/health"
    team-builder | [GIN] 2023/09/25 - 12:45:06 | 200 | 33.872µs | 192.168.0.4 | GET "/health"
    team-builder | [GIN] 2023/09/25 - 12:45:47 | 200 | 11.703662ms | 176.142.10.134 | GET "/employee/list"
    team-builder | [GIN] 2023/09/25 - 12:46:06 | 200 | 53.33µs | 192.168.0.4 | GET "/health"
    team-builder | [GIN] 2023/09/25 - 12:47:06 | 200 | 34.294µs | 192.168.0.4 | GET "/health"
    team-builder | [GIN] 2023/09/25 - 12:47:38 | 200 | 15.031524ms | 176.142.10.134 | POST "/employee/add"
    team-builder | [GIN] 2023/09/25 - 12:48:06 | 200 | 8.478038ms | 176.142.10.134 | POST "/employee/add"
    team-builder | [GIN] 2023/09/25 - 12:48:06 | 200 | 50.565µs | 192.168.0.4 | GET "/health"
    team-builder | [GIN] 2023/09/25 - 12:48:30 | 404 | 882ns | 213.32.122.81 | GET "/"
    team-builder | [GIN] 2023/09/25 - 12:49:06 | 200 | 242.343µs | 192.168.0.4 | GET "/health"
    team-builder | [GIN] 2023/09/25 - 12:49:54 | 200 | 3.255068ms | 176.142.10.134 | GET "/employee/list"
    team-builder | [GIN] 2023/09/25 - 12:50:06 | 200 | 40.966µs | 192.168.0.4 | GET "/health"
    team-builder | [GIN] 2023/09/25 - 12:51:06 | 200 | 150.942µs | 192.168.0.4 | GET "/health"
    team-builder | [GIN] 2023/09/25 - 12:52:06 | 200 | 61.154µs | 192.168.0.4 | GET "/health"
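Most of these lines are the load balancer's health probes, arriving every minute from 192.168.0.4. As a minimal sketch (the function name `without_health_checks` is hypothetical), a small filter keeps only the real API traffic:

```shell
# Read access-log lines on stdin and drop the GET "/health" probes.
# The pattern tolerates any amount of whitespace between the method and the path.
without_health_checks() {
  grep -v -E 'GET[[:space:]]+"/health"' || true
}
```

It would be used as `docker compose logs | without_health_checks`.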

Conclusion

In this article, we saw how to use Terraform to create a reliable and secure infrastructure on Scaleway. We set up an API, a PostgreSQL database, a VPC, a public gateway, a load balancer and a private network.

In doing so, we limited unwanted access to our services from the internet and reduced potential vulnerabilities.

Terraform also gives us full control over our stack and its state, and opens the way to continuous deployment workflows.

You can find the complete project on GitHub