Running a Spark job

In order to correctly and efficiently run a Spark job on Datatask, please consider the following steps.

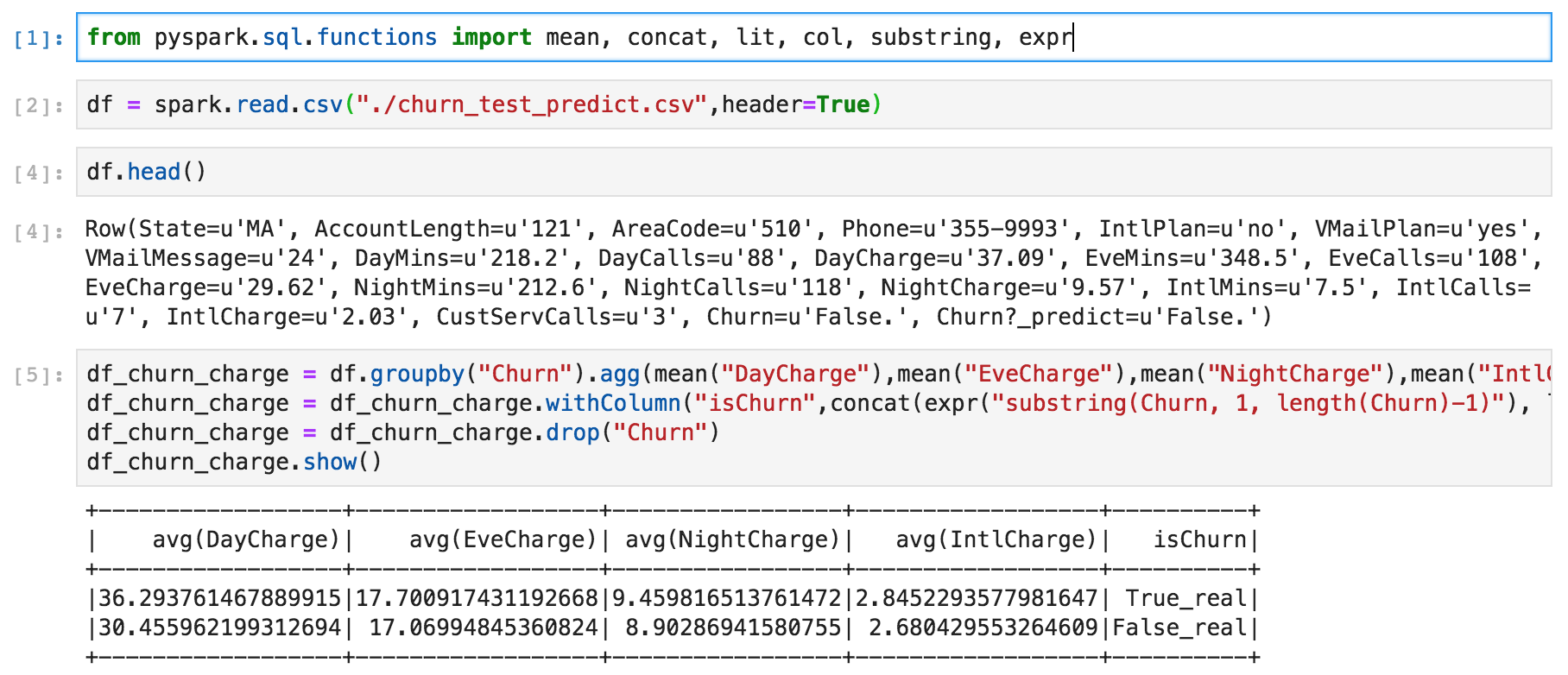

Development

The Jupyter Lab associated with DataTask is the best tool to prepare your development. With the right kernel, you will be able to submit cells and prepare your code.

Then, you can build your own script.

-

input : it will be developped in a section below

-

output : for most of users, files are generated. Please consider that your job will natively be able to write on Google Cloud Storage by specifying a full GCS path

Prepare job submission

Configuration

Create a config file, param.json, with the following fields :

-

project_id : the Google Cloud project

-

region : the Google Cloud region for the Spark cluster creation

-

zone : the Google Cloud zone for the Spark cluster creation

-

config_job :

-

bucket : the Google Cloud Storage bucket where your script will be upload

-

py_spark : the name of your script

-

args : a list of the args that will be used as input of your script. For example, in a pySpark script, you will be able to use them with

sys.argv[1] -

image : the path to the Spark image that will be used for the master and nodes of the cluster

-

num_master : the number of masters in the cluster

-

type_master : the masters' machine type

-

num_worker : the number of workers in the cluster

-

type_worker : the workers' machine type

-

num_preemptible_worker : the number of preemptible workers in the cluster

Git

To be able to deploy your job :

-

use, duplicate and rename the template folder

submit_dataproc_job- with all its content - and add your script inside thecodefolder -

update the

Dockerfileby replacing the filename inADD local_script.py /script/with your filename

Do not forget to commit your changes.

Run your job

To correctly run your Spark job, you can either deploy your job or create a pipeline. If you deploy your job :

-

specify your Google Cloud Storage bucket (env variable bucket_source in manifests) that will host the Spark config. It has to be specified in your new submit_dataproc_job job manifest

-

create a folder in this bucket and add the config file (

param.jsonexplained above). The folder name has to be the job name -

deploy your job in Datatask

This job will create the Dataproc cluster, submit your job and shutdown the cluster.

If you create a pipeline, it can be useful to use the same cluster for several jobs. In this case, follow these steps :

-

create a folder in Git with your pipeline configuration (

pipeline.json) with at least the following steps : -

create Dataproc cluster : this job will create the desire Spark cluster

-

submit Dataproc job : specific for your job - this job will submit your script to the cluster and run it

-

delete Dataproc cluster : this job will delete the Spark cluster

-

specify your Google Cloud Storage bucket (env variable bucket_source in manifests) that will host the Spark config. It has to be specified in create_dataproc_cluster,submit_dataproc_job and delete_dataproc_cluster jobs manifest

-

create a folder in this bucket and upload the config file (

param.jsonexplained above) without the keyspy_sparkandargs. The folder name has to be the pipeline name -

inside this pipeline folder, create a folder for your submit_dataproc_job and upload a config file

param.jsonwith{"config_job":{"py_spark":"","args":[""]}}(the missing informations from the global config file). This step is similar for a non Spark job. This folder name has to be the job name -

start your pipeline in Datatask

You can also apply other local or Spark jobs before deleting the Dataproc cluster. If you do not want to use the same cluster for several jobs of your pipeline, do not include create and delete Dataproc cluster jobs. You will not have to create a config file inside your pipeline folder, only inside your jobs' folders.